Google algorithms are complex sets of rules and processes that determine the relevance and ranking of web pages in search results. These algorithms are constantly evolving, with updates and changes occurring regularly to ensure the best possible user experience.

Over the years, Google has introduced numerous algorithm updates, each with its own specific focus and objectives. From Panda to BERT to the Page Experience update, each has aimed to improve the quality and relevance of search results for users.

In this blog post, we explore some of the most significant Google algorithm updates of recent years. We examine how they have affected search rankings and what website owners and content creators can do to help their pages rank highly. We will also share insights and analysis on the latest algorithm updates as they are released, so you can stay up to date with the latest changes in the world of search.

What are Google Algorithms?

Google's algorithms are the backbone of its ability to deliver relevant, high-quality search results to users, which makes them central to the work of any website owner or SEO expert. These complex sets of rules and processes analyse web pages and their content to determine their relevance and ranking in search results.

Google's algorithms have evolved over time to keep up with changes in user behaviour and technology. That evolution runs from earlier updates such as Panda and Penguin to more recent ones such as Core Web Vitals and the Helpful Content Update.

Here at Tillison, we understand that these algorithm updates can be very confusing. To help clear up some of that confusion, we have broken down some of Google's key ranking signals:

- Page speed – Page speed refers to how fast a webpage loads and displays its content. It’s important because it impacts user experience and even search engine rankings.

- Location – Location is a Google ranking signal because it helps the search engine to provide relevant results to users based on their location. For instance, if a user searches for “coffee shops” on Google, the search engine will display coffee shops in the user’s local area first.

- Keywords – Keywords are words or phrases that users enter into search engines to find relevant content. They are an essential ranking factor for search engines like Google because they help determine the relevance of a webpage to a user’s query.

- Trustworthiness – There are several factors that contribute to a website’s trustworthiness, including the quality and accuracy of its content, its security and privacy measures, and the reputation and authority of its creators or owners.

- Expertise – Expertise is a ranking factor used by Google to evaluate the quality and relevance of content on a website. To improve their website’s expertise, website owners should focus on creating high-quality, original content that is well-researched and informative and demonstrates their expertise in the topic.

- Domain Authority – Domain Authority is a third-party metric, created by Moz, that estimates the overall authority and credibility of a website's domain. Google has said it does not use this score itself, but the factors it approximates still matter. These include the quantity and quality of backlinks pointing to the domain, the relevance and accuracy of the content on the domain, and the overall user engagement and popularity of the domain.

- Mobile Friendliness – Mobile friendliness is a ranking factor used by Google to evaluate the usability and accessibility of a website on mobile devices. Websites that are designed to be mobile-friendly are more likely to rank higher in search results for users searching on mobile devices.

- Website Structure –

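- Website Security – HTTPS is a Google ranking signal because it ensures your website has a secure connection, protecting users and their data.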

Different algorithms focus on different aspects of Search. Some take aim at black-hat link building, while others aim to improve local searches. It all feeds into Google’s ethos to deliver the most relevant and reliable information, and make it universally accessible.

When a new algorithm update is rolled out, SEO specialists have to react quickly. Each one can require a new approach and strategy to make sure websites don't lose rankings.

Helpful Content

2022 Helpful Content Update:

In August 2022, Google rolled out a new algorithm update focused on “people-first content”. In essence, Google wants to surface content that is helpful and relevant to the user's search, improving the experience of using its search engine. The update's main aim is to reward creators who write primarily for people, rather than for search engines in the hope of ranking better.

It's understandable that Google has taken this route. First, a search engine should give its users helpful information. Second, the update cracks down on some of the black-hat SEO methods people use to push their pages to the top of search results.

So how can you avoid the wrath of Google’s Helpful Content Update? It’s actually quite simple:

- Ask yourself whether the content you are creating will be useful and relevant to your intended audience.

- Do you have experience and knowledge in the sector you're creating content for? If not, make sure that your research comes from specialists and is properly cited in the text.

- Google also suggests rereading your content afterwards and asking yourself whether readers will feel they've learnt enough to achieve the goal that brought them to your blog.

On the other hand, you should avoid writing primarily for search engines, although that doesn't mean ignoring them entirely. Here are a few questions to ask yourself when creating your content:

- Are you producing lots of content on widely different topics in the hope that some of it will rank highly?

- Are you mainly rehashing what someone else has said in a blog post, or are you adding more value to the topic?

- Are you choosing topics simply because they're trending or have recently attracted a lot of traffic?

- Are you writing to a specific word count in the hope that Google will rank it higher? (Heads up, it won't.)

Google’s algorithm history

May 2022 core update

May 25th, 2022 saw Google's first big core update of the year. There hadn't been an update like this for six months, since November 2021. Algorithm updates are a big deal for Google. They mean it is refining how its search engine interprets web pages, which affects important factors such as your company's ranking, traffic, revenue and more.

This was a broad core update. It affected search ranking factors generally and didn't target one specific issue, which is why Google calls it a “broad” update. That isn't always the case: past updates such as Panda and Penguin specifically targeted the quality of content and backlinks respectively. Broad core updates roll out gradually and can have a significant effect on a website's ranking.

What you can do (or keep doing)

To keep your website ranking where it should be, follow a few basic practices that Google is likely to consider in any algorithm update.

Focus on E-A-T (Expertise, Authoritativeness, Trustworthiness)

- E-A-T is the acronym Google uses to describe the best practices for ranking well in its search engine. When creating any content on your website, you should consider whether you are:

- a) demonstrating expertise in your field

- b) writing from a position of authority

- c) presenting information in a way readers find trustworthy

- If the answer is consistently yes to E-A-T then you’ll have a better chance of ranking on Google no matter what algorithm update they throw at you!

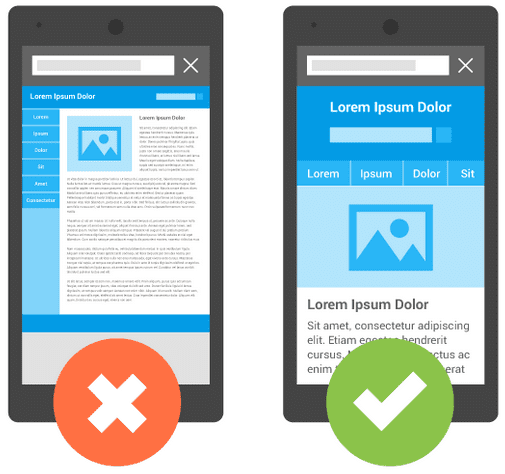

Keep your website Mobile-ready

- It's no surprise that in 2022 a website's usability is paramount to its success. It's very likely that a large portion of your visitors come from mobile devices, so much so that Google now ranks websites based on their mobile version.

- With this in mind, you should always have a mobile-first mindset. If your website looks and works great on mobile, Google is more likely to favour it. Algorithm updates will continue to roll out and are very likely to keep favouring sites that work well on mobile devices.

Common traits after a broad core update

Don't fret: a lot can happen after a broad core update from Google, and ranking volatility is usually part of it. If you saw your website rankings bounce all over the place in May and June 2022, you probably weren't alone. This happens because Google rolls out its algorithm updates gradually. As of now (July 2022), the intensity of the update does appear to have levelled out.

It's in your best interests to keep a close eye on your website rankings and other metrics for several months after a broad core update. Research how it may be affecting similar companies in your industry and adjust accordingly. Above all, keep on top of the basic practices Google expects in 2022.

Page experience update (2022)

Following on from its emphasis on Core Web Vitals and page experience, Google finally rolled out the 2021 page experience update on desktop. The 2022 page experience update primarily affects page experience ranking signals, including the Core Web Vitals metrics. These signals include an HTTPS connection, which ensures your website is secure, and the absence of page elements that block the user's view of the main content.

November 2021 core update

On 17th November 2021, Google announced the rollout of its latest core update. Broad core updates like this take between one and two weeks to roll out and can have a big impact, with rankings changing as new and old content are assessed together. Affected sites can look to improve their rankings by analysing the content on the site and making sure it is of the highest quality.

This update had a relatively gentle effect on the search results, leading many to think it was primarily an infrastructure update. However, the update appears to have affected sectors including:

- Reference materials

- eCommerce

- Law and Government

- Health

- Stock photography

July 2021 core update

The second part of the broad core update was released on 1st July 2021 and completed the update that began the previous month. It was a smaller update than the June release, although its changes were felt much more quickly. Affected sectors included:

- Real estate

- Shopping

- Fitness

- Science

- Pets

- Animals

June 2021 spam update

Google implemented a two-part spam update on the 23rd and 28th June 2021 as part of its regular work to improve results. It's not clear exactly which spam tactics Google was targeting. Even so, spam updates are a reminder for SEOs to steer clear of black-hat tactics, or risk losing rankings in updates like this.

Page experience update (2021)

The page experience update began rolling out on June 15th 2021 and continued through to the end of August. Focused on user experience, it brought the Core Web Vitals, including cumulative layout shift (CLS), into Google's ranking signals.

June 2021 Core Update

The June 2021 Core Update was the first of two parts. The rest of the update was released in July 2021 because Google needed more time to finish it. This first part took 10 days to roll out completely. Sites with thin content were affected most, along with some specific sectors, including:

- Travel

- Health

- Auto and vehicles

- Science

- Pets

- Animals

Core Web Vitals (2021)

In June 2021, Google began to roll out its page experience factors as a ranking signal, including its new Core Web Vitals. These are a set of measurable metrics that are good indicators of page experience: largest contentful paint (LCP), first input delay (FID) and cumulative layout shift (CLS). For the full lowdown, visit our blog.

However, it is worth noting that as the measures of page experience evolve over time so may the Core Web Vitals.
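To make the three metrics more concrete, here is a minimal Python sketch that rates a page's field data against Google's 2021 Core Web Vitals thresholds. The published thresholds are LCP 2.5 s for “good” and 4 s for “poor”, FID 100 ms and 300 ms, and CLS 0.1 and 0.25, assessed at the 75th percentile of page loads. The sample numbers and the function names (`percentile_75`, `classify`) are hypothetical. The nearest-rank percentile is also a simplifying assumption; in practice, tools such as PageSpeed Insights collect and aggregate this data for you.

```python
import math

# Google's 2021 Core Web Vitals thresholds: (good upper bound, needs-improvement upper bound).
# Anything above the second value is rated "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def percentile_75(samples):
    """Nearest-rank 75th percentile (a simplifying assumption; other methods differ slightly)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def classify(metric, samples):
    """Return the page's 75th-percentile value for a metric and its rating."""
    good, needs_improvement = THRESHOLDS[metric]
    value = percentile_75(samples)
    if value <= good:
        return value, "good"
    if value <= needs_improvement:
        return value, "needs improvement"
    return value, "poor"


# Hypothetical field data for a single page (one sample per page load).
field_data = {
    "LCP": [1.8, 2.1, 2.4, 3.2, 2.0, 2.6, 1.9, 2.2],
    "FID": [40, 80, 120, 60, 90, 70, 50, 310],
    "CLS": [0.02, 0.05, 0.12, 0.30, 0.08, 0.04, 0.09, 0.11],
}

for metric, samples in field_data.items():
    value, rating = classify(metric, samples)
    print(f"{metric}: p75 = {value} -> {rating}")
```

In this made-up example, LCP and FID pass, but CLS lands in “needs improvement” even though most page loads were fine. This is why a few bad layout shifts can still count against a page.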

Product reviews update (2021)

People appreciate product reviews that share in-depth research, as opposed to thin content that only summarises a bunch of products. With this in mind, Google launched its product reviews update to reward high-quality review content. The update was separate from its regular core updates. Its overall focus was to give users insightful analysis and original research, written by experts or enthusiasts who know the topic well.

Passage Ranking (2021)

Google made a breakthrough in understanding the relevance of specific passages within a page. This helps it find the “needle-in-a-haystack” information that searchers may be looking for, so it can display better results for a search query. Google estimated that this would improve 7% of search queries worldwide.

Its systems are able to highlight featured snippets more easily, but it also has an impact on how it ranks web pages overall.

BERT (2019)

Impacting a staggering one in ten searches, this Google algorithm focused on natural language processing. BERT is short for Bidirectional Encoder Representations from Transformers. It lets Google work out the full context of a word by looking at the words that come before and after it. This marked a big improvement in interpreting search queries and intent.

This update also affects some featured snippets as it now takes prepositions into account. Results are now more accurate and relevant to searchers’ intents.

Medic (2018)

This broad core algorithm update caused ranking shifts, although it mainly affected medical sites. No one knows the exact purpose of this Google algorithm. It's thought to have been either an attempt to improve understanding of user intent or a way to protect users from untrustworthy information.

Google openly admitted that there were no quick fixes for sites that lost rankings as a result of Medic. SEOs were told to focus on “building great content” as Medic was now “benefiting pages that were previously under-rewarded”.

There’s no “fix” for pages that may perform less well other than to remain focused on building great content. Over time, it may be that your content may rise relative to other pages.

— Google SearchLiaison (@searchliaison) March 12, 2018

Mobile Speed Update (2018)

While page speed was already a ranking factor for desktop searches, this update saw it become more important for mobile queries. This paved the way for mobile-first indexing.

Google said that it would only affect pages that “deliver the slowest experience to users”, and that a slow page with great, relevant content could still rank highly.

Possum (2016)

This update focused on local ranking. Local results began to depend more on the actual geographic location of the searcher and how the query was phrased, causing local SEO to become much more important.

Businesses located just outside towns and cities now found themselves included in the rankings for those towns and cities. The physical location of the searcher also became more important.

RankBrain (2015)

RankBrain was a large step towards better understanding user intent. It used machine learning to make guesses about the meaning of words and find synonyms to give relevant results. Bill Slawski described it like this:

“To an equestrian a horse is a large four-legged animal, to a carpenter, a horse has four legs, but it doesn’t live in fields or chew hay, to a gymnast a horse is something I believe you do vaults upon. With RankBrain context matters, and making sure you capture that context is possibly a key to optimising for this machine-learning approach.”

This meant that creating individual pages optimised for each keyword variant was well and truly dead. Good copy became optimised for keywords and their synonyms, and Google began showing more results that were relevant to the whole keyword phrase.

Mobilegeddon (2015)

By 2015, more than 50% of Google search queries were coming from mobile devices. It was time for an algorithm update to reflect this. Enter Mobilegeddon.

Mobile-friendly pages were now given a ranking boost on mobile searches to reflect the shift in consumer behaviour. The update was rolled out worldwide, but Google made a point of saying that the “intent of the search query is still a very strong signal”. Great content could still outrank a more mobile-friendly page.

Pigeon (2014)

Local search received some attention with Google’s Pigeon algorithm in 2014. Affecting both results pages and Google Maps, Pigeon improved distance and location ranking parameters, and it emphasised the need for good local SEO.

As part of the update, seven-pack local listings began to be downsized. At the same time, organic ranking signals started carrying more weight if local businesses wanted to be featured.

Hummingbird (2013)

Hummingbird saw Google’s algorithms change direction. Affecting 90% of all searches, it brought semantic search to the fore to give searchers the answers they were looking for. More attention was given to each word in a query, meaning the whole phrase was taken into account. Hummingbird also laid the groundwork for voice search.

Optimising content for SEO changed as a result, and best practices began to include:

- Diversifying content length

- Producing visual content

- Using topic-appropriate language

- Using schema markup language

Pirate (2012)

Google did once offer its search interface in Pirate, but Pirate is also the name of a 2012 update. It was a signal in the ranking algorithm, designed to demote sites with large numbers of valid copyright removal notices.

Instead of showing sites with illegal content, Google prioritised streaming services like Netflix and Amazon. However, the sites hosting illegal content could still be found in the SERPs with a bit of digging.

Penguin (2012)

Thought to be named after the Batman villain, Google’s Penguin algorithm saw keyword stuffers’ sites demoted. Quality was becoming the name of the game, and keyword stuffing and black-hat link building were now being punished.

While Penguin only considered a site’s incoming links, it still affected more than 3% of search results in its initial rollout. Four more versions followed over the next four years before it was finally added to the core algorithm in 2016. It had changed link building strategies forever.

Venice (2012)

A game-changer for local SEO, Venice aimed to “find results from a user’s city more reliably”. Local intent became much more important for relevant searches and was based on the location you’d set (because you could do that in 2012) or your IP address.

Small businesses were now able to rank for shorter keywords and optimising for local SEO became more common. However, it also gave rise to location stuffing. Similar to keyword stuffing, pages were filled with town and city names to make them rank higher.

Panda (2011)

If you remember the days of content farms and thin, low-quality content, you remember a time before this Google algorithm. The search engine decided to put an end to low-value, low-quality sites, permanently changing the world of SEO and rewarding original content.

Panda wasn't a small update: it affected 11.8% of search queries. The algorithm was built on 23 questions and input from human quality raters, and it applied negative ranking signals to sites with:

- Thin, low-quality content

- Duplicate content

- A lack of authority and trustworthiness

- A high ad-to-content ratio

- Content that didn’t match the search query

Did any of these algorithms impact your rankings? Let us know in the comments or tweet us @TeamTillison.